[TL;DR 2023 = generative AI, 2024 = humanoid robotics, 2025 = ???]

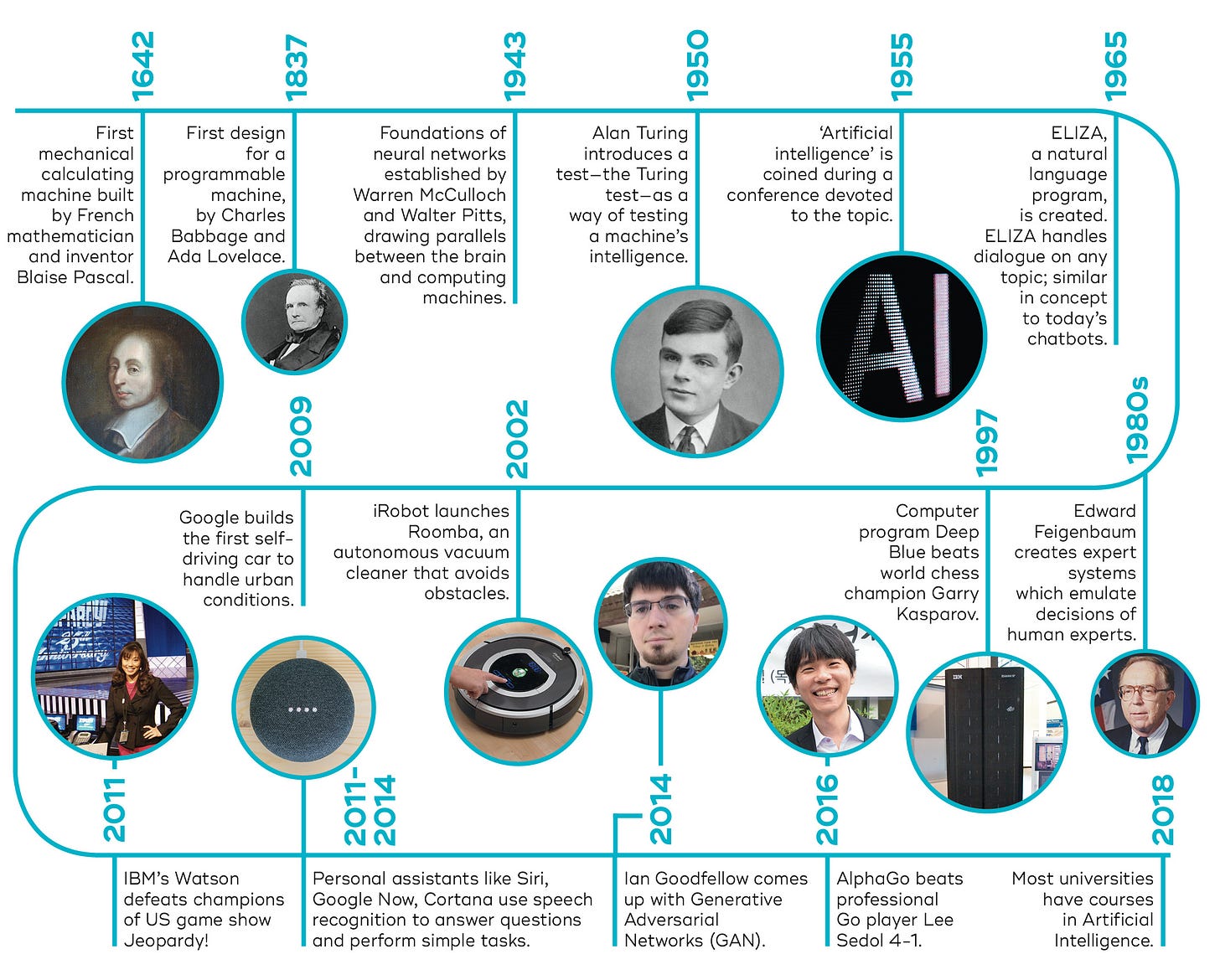

AI, or artificial intelligence, has been a hot topic lately, yet it is not always clear what ‘AI’ really means.

Google mentioned AI about 25 times throughout its latest keynote

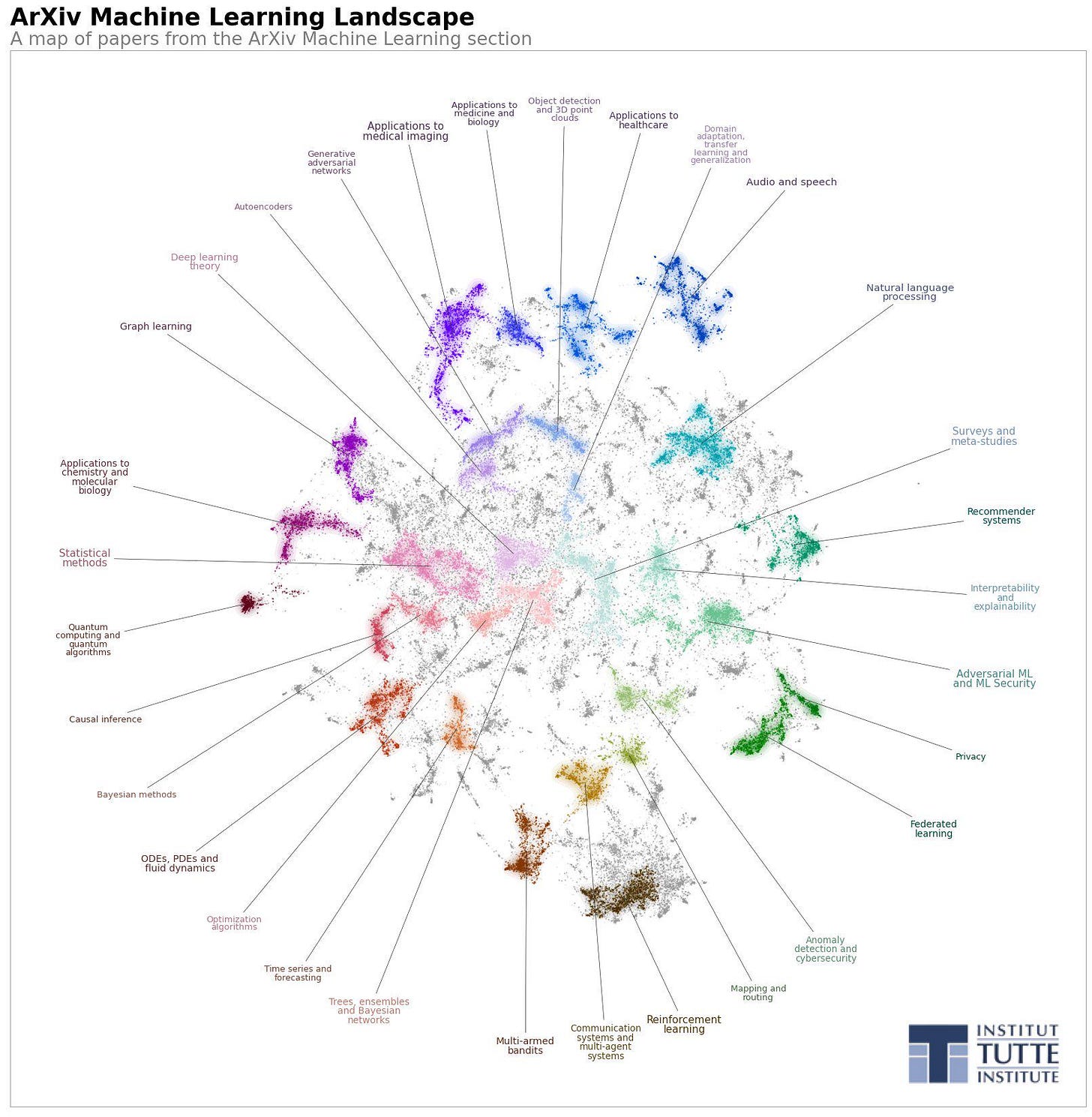

When Big Tech companies refer to AI, they are most commonly referring to deep learning applications. Deep learning is a specialized form of machine learning, but what is machine learning?

Machine learning is often characterized as applied statistics, and the underlying technology is nothing new.

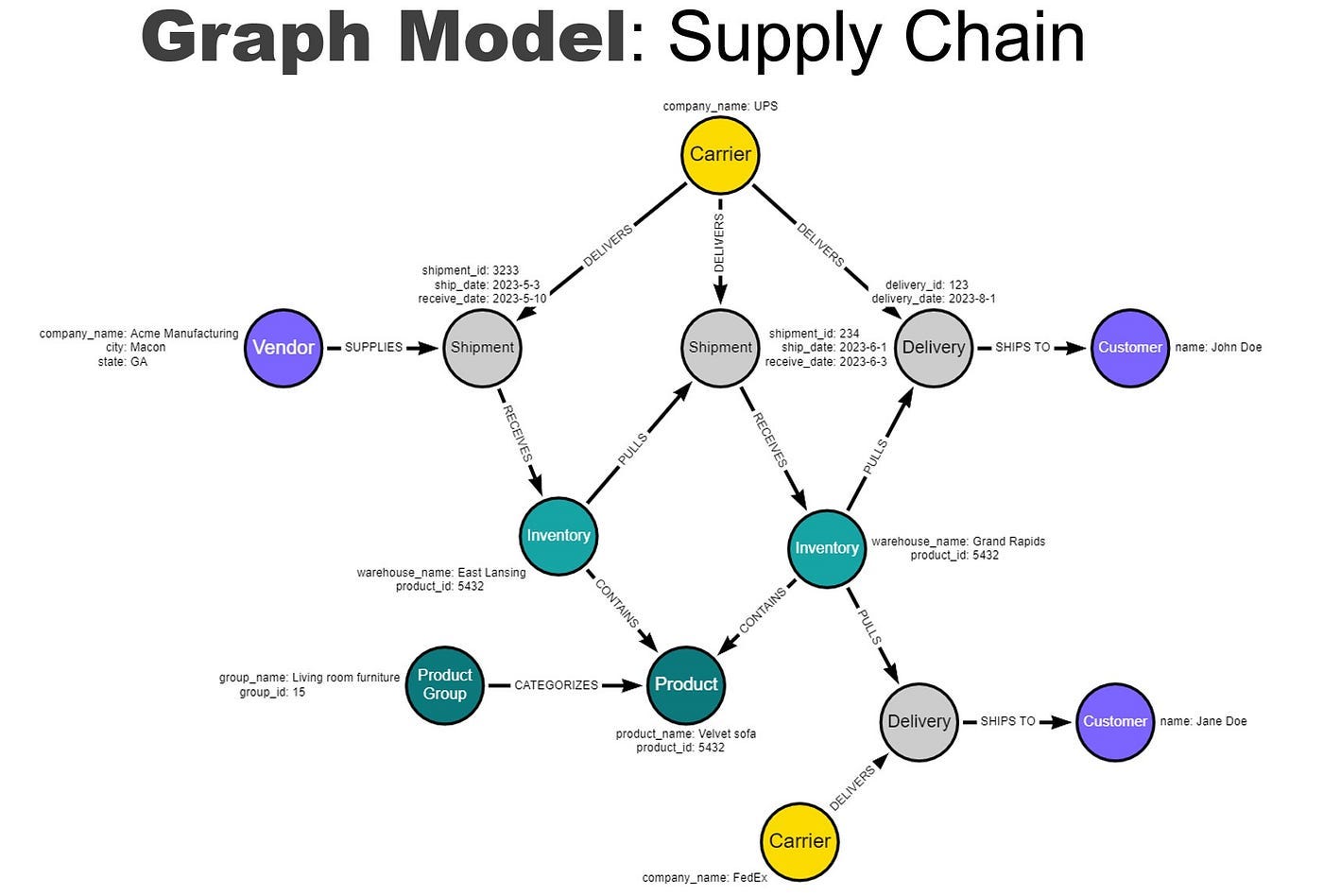

The term AI also covers approaches such as knowledge graphs and logic engines, but these are not classified as machine learning. The key difference is that a machine learning system learns its behavior from data and can keep adjusting as new data arrives, rather than following rules written by hand.
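
To make that distinction concrete, here is a minimal sketch contrasting a hand-written rule with a toy learner. Everything in it is an assumption made for illustration: the class names `FixedRule` and `LearnedThreshold`, the numbers, and the very simple learning method (placing a threshold midway between the average of each class). Real ML systems are far more sophisticated, but the contrast is the same: one system never changes, the other shifts as it sees more examples.

```python
class FixedRule:
    """A hand-written rule: its behavior never changes, whatever data it sees."""

    def __init__(self, threshold):
        self.threshold = threshold

    def predict(self, x):
        return x > self.threshold


class LearnedThreshold:
    """Toy learner (hypothetical): threshold sits midway between the class means."""

    def __init__(self):
        self.sums = {False: 0.0, True: 0.0}
        self.counts = {False: 0, True: 0}

    def update(self, x, label):
        # Learning step: fold one new labeled example into the running totals.
        self.sums[label] += x
        self.counts[label] += 1

    @property
    def threshold(self):
        # Requires at least one example of each class.
        mean_neg = self.sums[False] / self.counts[False]
        mean_pos = self.sums[True] / self.counts[True]
        return (mean_neg + mean_pos) / 2

    def predict(self, x):
        return x > self.threshold


rule = FixedRule(threshold=50.0)
model = LearnedThreshold()

# Made-up training data: (measurement, label).
for x, label in [(10.0, False), (20.0, False), (30.0, True), (40.0, True)]:
    model.update(x, label)

threshold_before = model.threshold      # midway between 15 and 35
prediction_before = model.predict(28.0)

# New data arrives: the learner adapts, the fixed rule does not.
model.update(100.0, True)
threshold_after = model.threshold
prediction_after = model.predict(28.0)
```

After the first four examples the learned threshold is 25, so the model labels 28 as positive while the fixed rule (threshold 50) does not. Once the new example arrives, the learned threshold moves upward and the model's answer for 28 changes, with no one rewriting any rules.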

So if deep learning is what people are actually excited about, what is that exactly?

Deep learning sits at the intersection of AI and data science. It uses deeply layered neural networks to learn hierarchical representations from large datasets, which enables predictive analytics, classification, regression, generation, and more!
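
A rough way to see why layering matters is the classic XOR example, sketched below in plain Python. The weights here are picked by hand purely for illustration (an assumption; a real network would learn them from data), and the helper names `dense`, `relu`, and `forward` are made up for this post. The hidden layer computes two intermediate features, and the output layer combines them into something no single linear layer can compute on its own.

```python
def relu(values):
    """Non-linearity: negative values become zero."""
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    """One fully connected layer: weighted sum of the inputs plus a bias, per output."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


# Hand-picked weights (illustrative assumption, not learned).
W1 = [[1.0, 1.0],   # hidden unit 1: how many inputs are on
      [1.0, 1.0]]   # hidden unit 2: fires only when both inputs are on
B1 = [0.0, -1.0]
W2 = [[1.0, -2.0]]  # output: "some input on" minus twice "both on"
B2 = [0.0]


def forward(x):
    hidden = relu(dense(x, W1, B1))   # layer 1: intermediate features
    return dense(hidden, W2, B2)[0]   # layer 2: combine the features


xor_table = {
    (a, b): forward([float(a), float(b)])
    for a in (0, 1)
    for b in (0, 1)
}
```

The resulting table maps (0, 0) and (1, 1) to 0.0, and (0, 1) and (1, 0) to 1.0. Deep networks stack many such layers, so that later layers build on the features computed by earlier ones.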

Neural Nets

Latent Diffusion

AI Ethics

Many are sounding the alarm about the ethics of deep learning models and the dangers of superintelligence. However, to consider these concerns in any practical sense, we must focus on model interpretability so that we truly understand what is going on behind the scenes.

AGI

HellaSwag: Can a Machine Really Finish Your Sentence? (May 19, 2019)

“In this paper, we show that commonsense inference still proves difficult for even state-of-the-art models, by presenting HellaSwag, a new challenge dataset. Though its questions are trivial for humans (>95% accuracy), state-of-the-art models struggle (<48%). We achieve this via Adversarial Filtering (AF), a data collection paradigm wherein a series of discriminators iteratively select an adversarial set of machine-generated wrong answers.”

How does physics connect to machine learning? (Jaan Li)

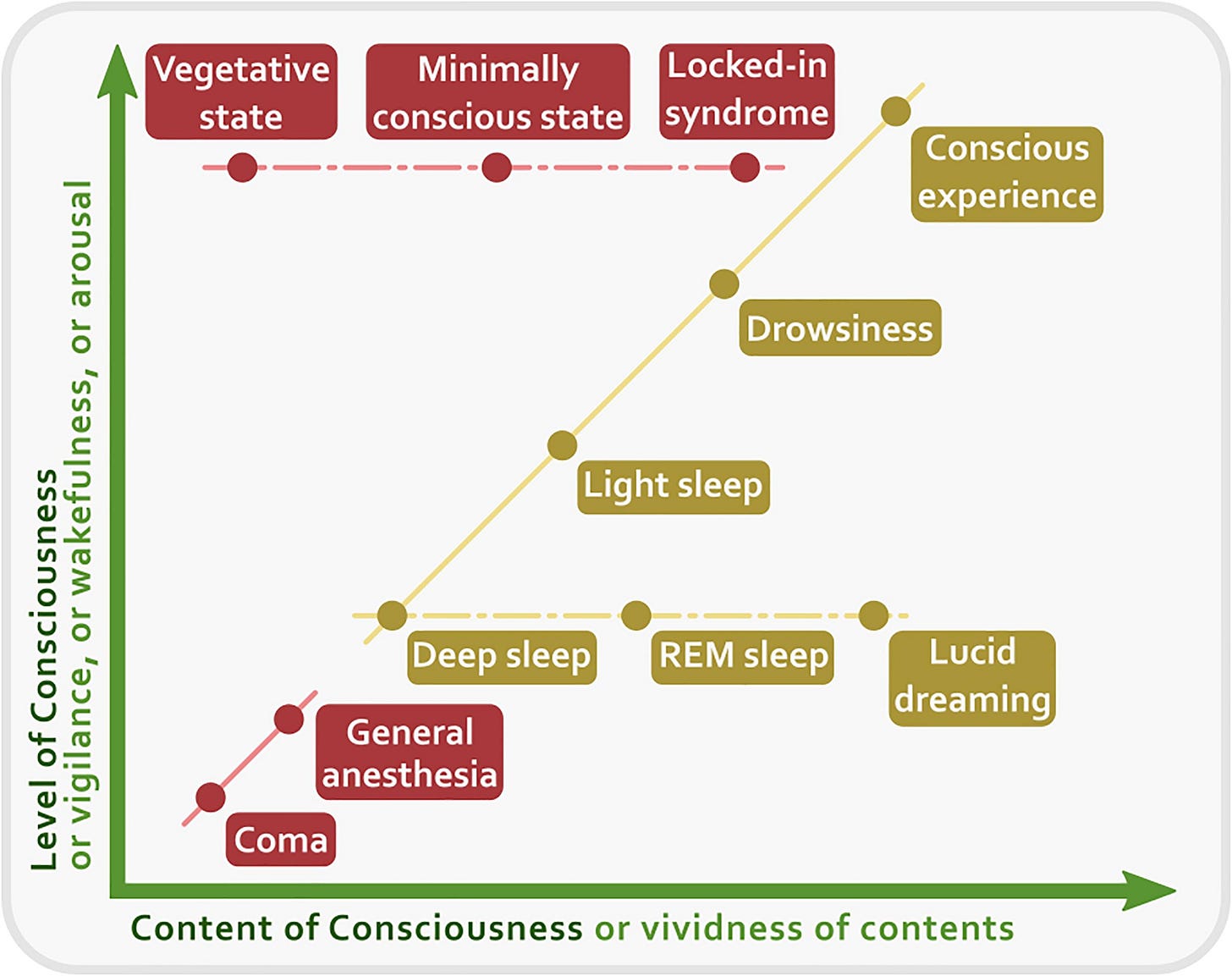

Measures of Consciousness

Processing Power

Semi-annual update of the top 500 ranking supercomputers in the world (top500.org)

The current approach to improving model performance seems to be exponentially increasing parameter counts. In reality, we must also make models efficient enough to run on less powerful devices like phones and to use less energy. It is a matter of working smart versus working hard.
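
Some back-of-the-envelope arithmetic shows why parameter count matters so much for small devices. The sketch below estimates the memory needed just to hold a model's weights at different numeric precisions. The 7-billion-parameter figure is a hypothetical example, and storing weights in fewer bits (quantization) is only one of several efficiency techniques, brought in here as an illustration rather than something specific to any model mentioned in this post. The estimate ignores everything else a running model needs, so treat it as a lower bound.

```python
def model_memory_gb(num_params, bits_per_param):
    """Memory for the weights alone, in gigabytes (1 GB = 10**9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


PARAMS = 7_000_000_000  # hypothetical 7-billion-parameter model

# Weight storage at 32-, 16-, 8-, and 4-bit precision.
footprints = {bits: model_memory_gb(PARAMS, bits) for bits in (32, 16, 8, 4)}

for bits, gigabytes in footprints.items():
    print(f"{bits:>2}-bit weights: {gigabytes:.1f} GB")
```

At 32 bits per weight this hypothetical model needs 28 GB for its weights, which is beyond any phone. At 4 bits it needs 3.5 GB, which starts to look feasible. The same model, stored smarter.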

Most deep learning models run on powerful GPUs, typically from Nvidia because mainstream software is built around CUDA, either in the cloud on AWS or Azure servers or on an expensive local computer. Apple Silicon recently started a huge trend in which manufacturers prioritize ARM chips for their power efficiency and pair them with dedicated hardware for machine learning calculations. Now Intel, AMD, and other chip manufacturers have also begun to release products aimed at on-device machine learning.

One huge problem we will encounter is the exponentially increasing difficulty of fitting more transistors into the same space. The physical size of each transistor has become so small that quantum effects are starting to interfere with the electronics. As we gradually approach the limit of the current transistor paradigm we must consider where to go next.

A few prospects are emerging that could push us into a new era of computing, including quantum computing and superconductors. Ultimately, though, the effects of quantum tunneling at scales below 5 nanometers mean that chips for compute-hungry tasks will just have to grow in size.

IonQ’s Calculus to Commercial Quantum Computing Advantage — IONQ

Revisiting The Economic Impact of Automation

Can We See the Impact of Automation in the Economics Statistics?

— Uncharted Territories, popular Substack

TASKS, AUTOMATION, AND THE RISE IN U.S. WAGE INEQUALITY

(MIT Economics, September 2022)

Physical AI

Bio-Computing

It is hard to determine when a computer will obtain consciousness or surpass the computing power of a human brain. The question itself is hard to pin down: Can binary programming mimic natural neurons? What is the theoretical computing power of a human brain? Are our neuron interactions quantum based? (Some researchers argue that our sense of smell is, though this remains debated.) How do we define consciousness?

Ultimately, biological systems may be more robust. There is so much more to learn from the mechanisms of nature. Life is truly the ultimate technology. You may be shocked to discover that the intelligence of individual cells is underestimated. I will be covering more about Michael Levin’s research in another post!

Moreover, there is so much more about neuroscience that we have barely discovered.

The most advanced computers may eventually be biological computers with engineered neurons.

AI made from living human brain cells performs speech recognition (New Scientist, Dec 11, 2023)

~80% accuracy in identifying voices (keep in mind this is a first prototype!!!)

Further Readings

arXiv is a free distribution service and an open-access archive for nearly 2.4 million scholarly articles in the fields of physics, mathematics, computer science, quantitative biology, quantitative finance, statistics, electrical engineering and systems science, and economics. Materials on this site are not peer-reviewed by arXiv.

— Has a lot of the newest deep learning papers